Run folktexts benchmark with a custom ACS task

This notebook describes how to define a custom prediction task based on American Community Survey (ACS) data.

[1]:

import folktexts

folktexts.__version__

[1]:

'0.0.22'

[2]:

from pathlib import Path

Note: Set the DATA_DIR variable to the directory on your file system that holds the ACS data. If no data is found there, folktexts will attempt to download it.

[3]:

DATA_DIR = Path("/fast/groups/sf") / "data"
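If the same notebook runs on several machines, the data path can be chosen at run time instead of being edited by hand. The following is a minimal sketch and not part of folktexts: the helper `resolve_data_dir` and the fallback location `~/data` are hypothetical names chosen for illustration.

```python
from pathlib import Path


def resolve_data_dir(preferred: Path, fallback: Path) -> Path:
    """Return `preferred` if it is an existing directory; otherwise create and return `fallback`."""
    if preferred.is_dir():
        return preferred
    # The fallback directory is created on demand so that downloaded data has somewhere to go.
    fallback.mkdir(parents=True, exist_ok=True)
    return fallback


# Hypothetical usage (commented out so that nothing is created on import):
# DATA_DIR = resolve_data_dir(Path("/fast/groups/sf") / "data", Path.home() / "data")
```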

Define custom task objects appropriately:

In this example, we'll try to predict whether someone is or ever was in the military, based on a set of demographic features.

We must define objects of the following classes:

- Threshold: Used to binarize the target column (not needed if the target is already binary);
- MultipleChoiceQA: Defines a question and answer scheme for the target column;
- TaskMetadata: Defines which columns to use as features, and which to use as the prediction target.

Select the target column (among those defined in folktexts.acs.acs_columns):

[4]:

TARGET_COLUMN = "MIL"

Define threshold to be applied to target column:

[5]:

from folktexts.threshold import Threshold

target_threshold = Threshold(4, "!=")

# data["MIL"] != 4 means "Is on active duty in the military or was in the past"

# data["MIL"] == 4 means "Never served in the military"
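To make the thresholding concrete, here is a plain-Python sketch of the binarization described in the comments above. It does not call folktexts: `binarize` and `OPERATORS` are hypothetical names, and how `Threshold` itself treats missing values is not established here.

```python
import math
import operator

# Comparison operators keyed by their string form (illustrative subset).
OPERATORS = {
    "!=": operator.ne,
    "==": operator.eq,
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
}


def binarize(values, threshold, op):
    """Map each value to 1 if `value <op> threshold` holds, else 0."""
    compare = OPERATORS[op]
    return [int(compare(value, threshold)) for value in values]


mil_codes = [1, 2, 3, 4, math.nan]
labels = binarize(mil_codes, 4, "!=")
# Codes 1, 2 and 3 map to 1; code 4 maps to 0.
# NaN != 4 evaluates to True under floating-point comparison,
# so a missing value is labeled 1 in this sketch.
```

Because a "not equal" comparison counts missing values as positives in this sketch, it is worth checking whether the target column contains missing entries before relying on the binarized labels.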

Define question and answer interface used for prompting:

[6]:

from folktexts.qa_interface import Choice, MultipleChoiceQA

target_column_qa = MultipleChoiceQA(
    column=target_threshold.apply_to_column_name(TARGET_COLUMN),
    text="Has this person ever served in the military?",
    choices=(
        Choice("Yes, this person is now on active duty or was in the past", 1),
        Choice("No, this person has never served in the military", 0),
    ),
)
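The sketch below illustrates what a question and answer scheme amounts to: each choice gets a letter key, and each key maps back to a binary target value. The layout mirrors the prompt printed later in this notebook, but the actual formatting is done by folktexts. `render_question` and `answer_key_to_value` are hypothetical helpers written only for illustration.

```python
from string import ascii_uppercase

# Each choice is a (text, target value) pair, in the order it is shown to the model.
choices = [
    ("Yes, this person is now on active duty or was in the past", 1),
    ("No, this person has never served in the military", 0),
]


def render_question(text, choices):
    """Render a multiple-choice question with lettered answer options."""
    lines = [f"Question: {text}"]
    for key, (choice_text, _value) in zip(ascii_uppercase, choices):
        lines.append(f"{key}. {choice_text}.")
    lines.append("Answer:")
    return "\n".join(lines)


def answer_key_to_value(choices):
    """Map each answer letter to the target value it stands for."""
    return {key: value for key, (_text, value) in zip(ascii_uppercase, choices)}


prompt_tail = render_question("Has this person ever served in the military?", choices)
key_map = answer_key_to_value(choices)
```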

Define task metadata:

[7]:

from folktexts.acs import ACSTaskMetadata

task = ACSTaskMetadata.make_task(
    name="ACSMilitary",
    description="predict if a person has ever served in the military",
    features=[
        "AGEP", "SCHL", "MAR", "POBP", "WKHP", "SEX", "RAC1P", "ST", "CIT", "DIS", "PINCP",
    ],
    target=TARGET_COLUMN,
    target_threshold=target_threshold,
    sensitive_attribute="RAC1P",
    multiple_choice_qa=target_column_qa,
)

Load ACS dataset for the custom task:

[8]:

%%time

from folktexts.acs.acs_dataset import ACSDataset

acs_dataset = ACSDataset.make_from_task(
    task=task,
    cache_dir=DATA_DIR,
)

Loading ACS data...

CPU times: user 38 s, sys: 19.3 s, total: 57.3 s

Wall time: 57.4 s

Note: This dataset contains all samples in the ACS PUMS file. You can now filter acs_dataset.data if you want to use only a portion of the data (e.g., only people in California, or only people aged 18 or older).

As an example, we'll filter the ACS data to keep only people aged 18 or older.

[9]:

print(f"Original number of samples: {len(acs_dataset.data)}")

acs_dataset.data = acs_dataset.data[acs_dataset.data["AGEP"] >= 18]

print(f"Parsed number of samples: {len(acs_dataset.data)}")

Original number of samples: 3236107

Parsed number of samples: 2580544

Optionally, you can subsample the dataset to get faster but noisier results.

[10]:

acs_dataset.subsample(0.01)

[10]:

<folktexts.acs.acs_dataset.ACSDataset at 0x14584395d510>
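A quick arithmetic check shows how the filtering and subsampling steps shrink the data. The sample counts below are copied from this notebook's outputs, including the `n_samples` value reported in the benchmark results. The test-split fraction is not stated anywhere in the notebook, so the sketch only infers it from those numbers, and the library's exact rounding is an assumption.

```python
n_original = 3_236_107      # samples before filtering
n_filtered = 2_580_544      # samples with AGEP >= 18
subsample_fraction = 0.01   # argument passed to acs_dataset.subsample
n_test_reported = 2_581     # 'n_samples' in the benchmark results

# Share of the original data that survives the age filter.
retained = n_filtered / n_original

# Approximate dataset size after subsampling (rounding is an assumption).
n_subsampled = round(n_filtered * subsample_fraction)

# Fraction of the subsampled data that the benchmark evaluated on.
implied_test_fraction = n_test_reported / n_subsampled

print(f"retained after filtering: {retained:.1%}")
print(f"samples after subsampling: {n_subsampled}")
print(f"implied test fraction: {implied_test_fraction:.3f}")
```

With only a few thousand evaluation samples, per-subgroup metrics can rest on very few examples, which is one reason subsampled results are noisier.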

Load LLM from Hugging Face or from local disk

[11]:

MODEL_NAME_OR_PATH = "meta-llama/Meta-Llama-3-8B"

[12]:

%%time

from folktexts.llm_utils import load_model_tokenizer

model, tokenizer = load_model_tokenizer(MODEL_NAME_OR_PATH)

CPU times: user 4.39 s, sys: 6.29 s, total: 10.7 s

Wall time: 13.1 s

Create LLMClassifier object.

[13]:

from folktexts.classifier import TransformersLLMClassifier

llm_clf = TransformersLLMClassifier(
    model=model,
    tokenizer=tokenizer,
    task=task,
    batch_size=10,
    context_size=600,
)

Validation: Check that the prompts generated for the custom task make sense, and adjust the task definition if they do not.

[14]:

X_sample, _y_sample = acs_dataset.sample_n_train_examples(n=1)

print(llm_clf.encode_row(X_sample.iloc[0], question=llm_clf.task.question))

The following data corresponds to a survey respondent. The survey was conducted among US residents in 2018. Please answer the question based on the information provided. The data provided is enough to reach an approximate answer.

Information:

- The age is: 50 years old.

- The highest educational attainment is: Regular high school diploma.

- The marital status is: Married.

- The place of birth is: Nevada.

- The usual number of hours worked per week is: 50 hours.

- The sex is: Male.

- The race is: White.

- The resident state is: Nevada.

- The citizenship status is: Born in the United States.

- The disability status is: No disability.

- The yearly income is: $70,000.

Question: Has this person ever served in the military?

A. Yes, this person is now on active duty or was in the past.

B. No, this person has never served in the military.

Answer:
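The prompt ends at "Answer:" so that the model's next-token distribution can be read as a prediction. One common way to turn that distribution into a risk score is to apply a softmax over just the answer tokens. The sketch below illustrates that general technique under stated assumptions; it is not a description of folktexts internals, and `risk_score` and its logit inputs are hypothetical.

```python
import math


def risk_score(logit_positive: float, logit_negative: float) -> float:
    """Softmax restricted to the two answer tokens; returns P(positive answer)."""
    # Subtracting the maximum keeps the exponentials numerically stable.
    shift = max(logit_positive, logit_negative)
    exp_positive = math.exp(logit_positive - shift)
    exp_negative = math.exp(logit_negative - shift)
    return exp_positive / (exp_positive + exp_negative)


# Hypothetical next-token logits for the answer letters "A" (yes) and "B" (no).
score = risk_score(logit_positive=-1.2, logit_negative=0.4)
prediction = int(score >= 0.5)  # binarize the score at a 0.5 threshold
```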

Run benchmark on the custom task

[15]:

from folktexts.benchmark import Benchmark

bench = Benchmark(llm_clf=llm_clf, dataset=acs_dataset)

[16]:

%%time

bench.run(results_root_dir=".")

We detected that you are passing `past_key_values` as a tuple and this is deprecated and will be removed in v4.43. Please use an appropriate `Cache` class (https://huggingface.co/docs/transformers/v4.41.3/en/internal/generation_utils#transformers.Cache)

CPU times: user 4min 25s, sys: 5.32 s, total: 4min 31s

Wall time: 4min 30s

[16]:

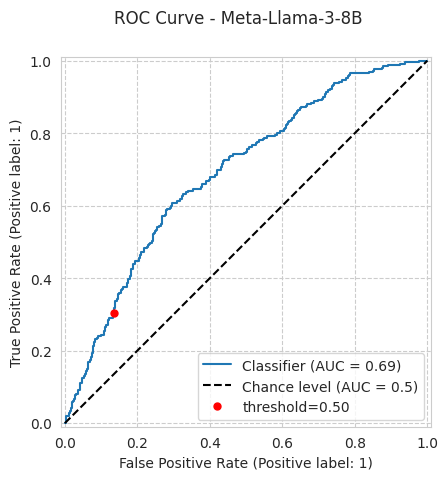

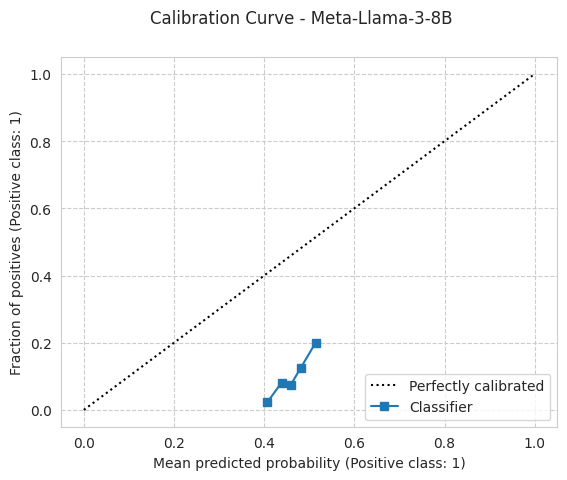

{'threshold': 0.5,

'n_samples': 2581,

'n_positives': 259,

'n_negatives': 2322,

'model_name': 'Meta-Llama-3-8B',

'accuracy': 0.8082138705927935,

'tpr': 0.305019305019305,

'fnr': 0.694980694980695,

'fpr': 0.13565891472868216,

'tnr': 0.8643410852713178,

'balanced_accuracy': 0.5846801951453114,

'precision': 0.20050761421319796,

'ppr': 0.15265401007361487,

'log_loss': 0.6253456831680403,

'brier_score_loss': 0.21635791438469001,

'accuracy_ratio': 0.79638671875,

'accuracy_diff': 0.20361328125,

'fpr_ratio': 0.0,

'fpr_diff': 1.0,

'fnr_ratio': 0.0,

'fnr_diff': 1.0,

'precision_ratio': 0.0,

'precision_diff': 1.0,

'balanced_accuracy_ratio': 0.4435516271595018,

'balanced_accuracy_diff': 0.5282227307398932,

'tpr_ratio': 0.0,

'tpr_diff': 1.0,

'ppr_ratio': 0.0,

'ppr_diff': 1.0,

'tnr_ratio': 0.0,

'tnr_diff': 1.0,

'equalized_odds_ratio': 0.0,

'equalized_odds_diff': 1.0,

'roc_auc': 0.6900338877083063,

'ece': 0.3593739652181054,

'ece_quantile': 0.3593739652181053,

'predictions_path': '/lustre/home/acruz/folktexts/notebooks/Meta-Llama-3-8B_bench-190963433/ACSMilitary_subsampled-0.01_seed-42_hash-1434010626.test_predictions.csv',

'config': {'numeric_risk_prompting': False,

'few_shot': None,

'reuse_few_shot_examples': False,

'batch_size': None,

'context_size': None,

'correct_order_bias': True,

'feature_subset': None,

'population_filter': None,

'seed': 42,

'model_name': 'Meta-Llama-3-8B',

'model_hash': 1160994164,

'task_name': 'ACSMilitary',

'task_hash': 4147552870,

'dataset_name': 'ACSMilitary_subsampled-0.01_seed-42_hash-1434010626',

'dataset_subsampling': 0.01,

'dataset_hash': 1434010626},

'benchmark_hash': 190963433,

'results_dir': '/lustre/home/acruz/folktexts/notebooks/Meta-Llama-3-8B_bench-190963433',

'results_root_dir': '/lustre/home/acruz/folktexts/notebooks',

'current_time': '2024.08.30-14.57.43',

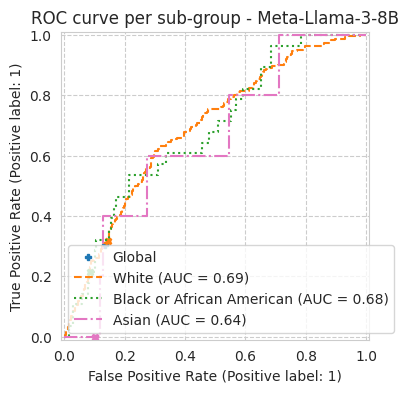

'plots': {'roc_curve_path': '/lustre/home/acruz/folktexts/notebooks/Meta-Llama-3-8B_bench-190963433/imgs/roc_curve.pdf',

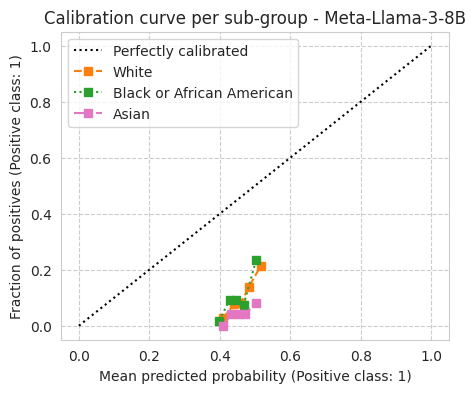

'calibration_curve_path': '/lustre/home/acruz/folktexts/notebooks/Meta-Llama-3-8B_bench-190963433/imgs/calibration_curve.pdf',

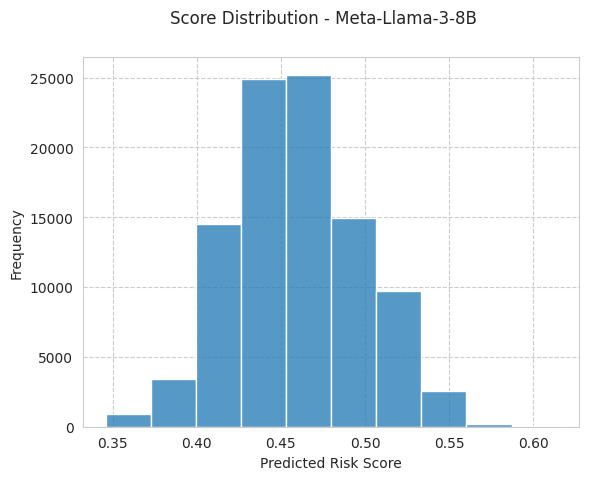

'score_distribution_path': '/lustre/home/acruz/folktexts/notebooks/Meta-Llama-3-8B_bench-190963433/imgs/score_distribution.pdf',

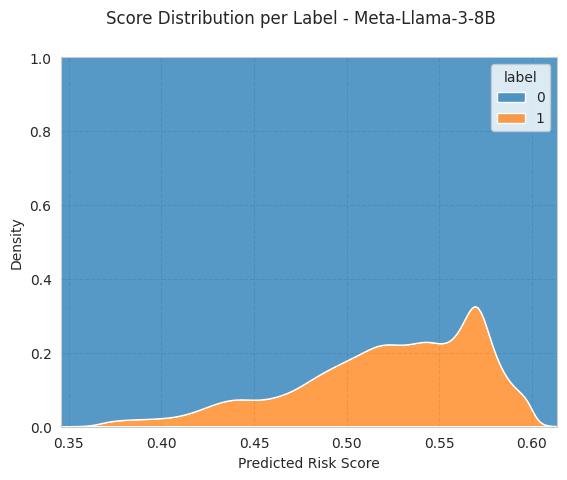

'score_distribution_per_label_path': '/lustre/home/acruz/folktexts/notebooks/Meta-Llama-3-8B_bench-190963433/imgs/score_distribution_per_label.pdf',

'roc_curve_per_subgroup_path': '/lustre/home/acruz/folktexts/notebooks/Meta-Llama-3-8B_bench-190963433/imgs/roc_curve_per_subgroup.pdf',

'calibration_curve_per_subgroup_path': '/lustre/home/acruz/folktexts/notebooks/Meta-Llama-3-8B_bench-190963433/imgs/calibration_curve_per_subgroup.pdf'}}
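The headline metrics at the 0.5 threshold can be cross-checked from the reported rates and class counts. The sketch below recovers the confusion-matrix counts from `tpr`, `fpr`, `n_positives` and `n_negatives`, then recomputes the derived metrics. It also computes the accuracy of always predicting the majority class, which is a useful reference point given the class imbalance. All inputs are copied from the results above, and the variable names are chosen for illustration.

```python
n_positives, n_negatives = 259, 2322
tpr, fpr = 0.305019305019305, 0.13565891472868216

# Recover integer confusion-matrix counts from the reported rates.
tp = round(tpr * n_positives)
fp = round(fpr * n_negatives)
fn = n_positives - tp
tn = n_negatives - fp
n_samples = n_positives + n_negatives

accuracy = (tp + tn) / n_samples
balanced_accuracy = (tp / n_positives + tn / n_negatives) / 2
precision = tp / (tp + fp)
ppr = (tp + fp) / n_samples  # share of samples predicted positive

# Accuracy of a trivial classifier that always predicts the majority class.
majority_baseline = max(n_positives, n_negatives) / n_samples

print(f"TP={tp} FP={fp} FN={fn} TN={tn}")
print(f"accuracy={accuracy:.4f} majority baseline={majority_baseline:.4f}")
```

The recomputed values match the reported `accuracy`, `balanced_accuracy`, `precision` and `ppr`. The majority-class baseline comes out at roughly 0.90, so the reported accuracy of about 0.81 is below what a constant "never served" prediction would achieve. For an imbalanced task like this one, `balanced_accuracy` and `roc_auc` are the more informative numbers.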

[17]:

bench.plot_results();
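The `ece` value in the results summarizes the calibration curve as a single number. As a rough illustration, the sketch below computes expected calibration error with equal-width bins in plain Python. The function name, the default bin count, and the binning details are assumptions and may differ from what folktexts uses.

```python
def expected_calibration_error(scores, labels, n_bins=10):
    """Weighted average gap between mean predicted score and observed positive rate per bin."""
    bins = [[] for _ in range(n_bins)]
    for score, label in zip(scores, labels):
        # Scores equal to 1.0 are placed in the last bin.
        index = min(int(score * n_bins), n_bins - 1)
        bins[index].append((score, label))

    n_total = len(scores)
    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_score = sum(score for score, _ in members) / len(members)
        positive_rate = sum(label for _, label in members) / len(members)
        ece += len(members) / n_total * abs(mean_score - positive_rate)
    return ece


# Toy example: scores lean the right way but are not fully confident.
toy_ece = expected_calibration_error([0.1, 0.2, 0.8, 0.9], [0, 0, 1, 1], n_bins=2)
```

A perfectly calibrated model has an ECE of zero. Larger values mean the predicted scores sit further from the observed frequencies.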